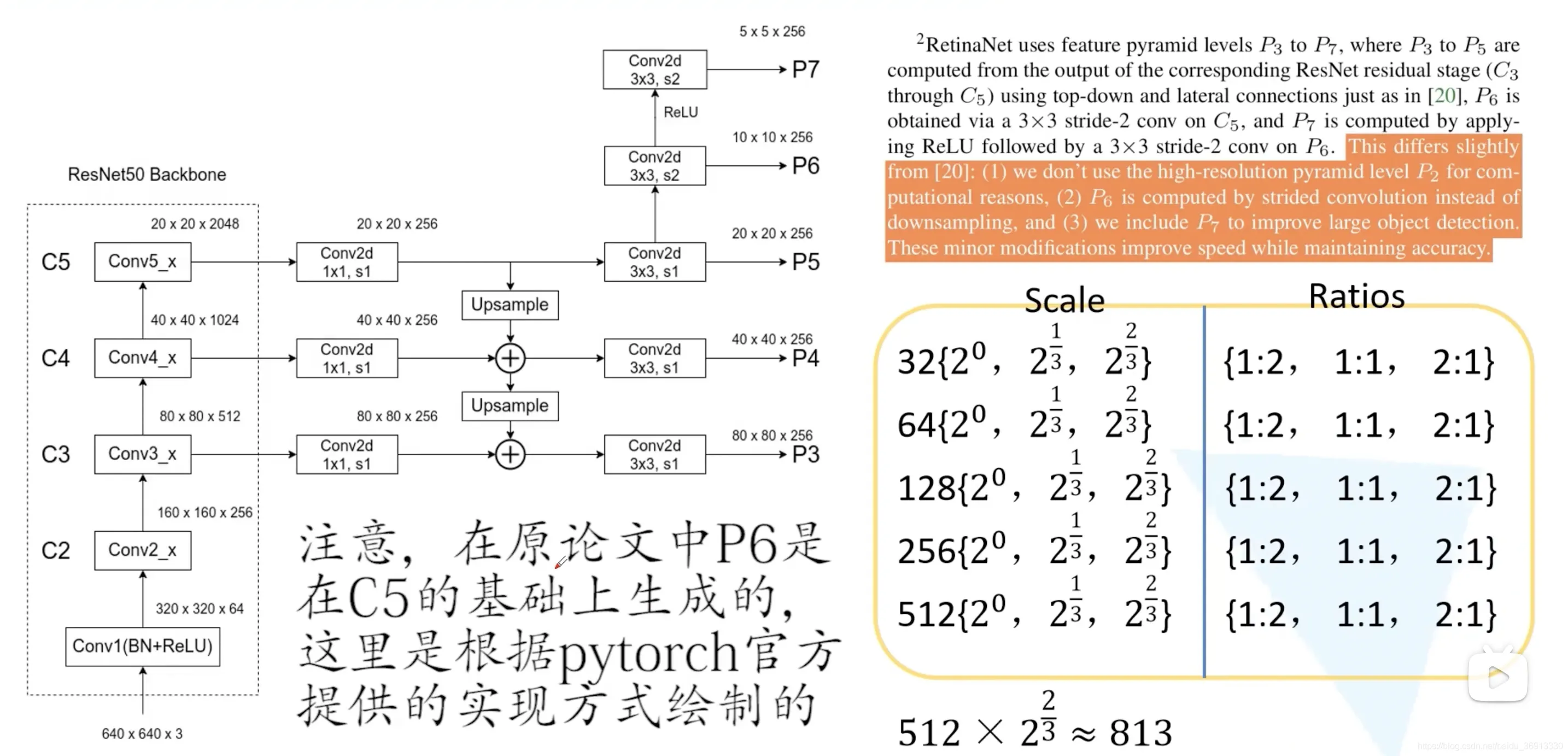

import torch.nn as nn
import torch
import math
import torch.utils.model_zoo as model_zoo
from torchvision.ops import nms
from retinanet.utils import BasicBlock, Bottleneck, BBoxTransform, ClipBoxes
from retinanet.anchors import Anchors
from retinanet import losses


class PyramidFeatures(nn.Module):
    def __init__(self, C3_size, C4_size, C5_size, feature_size=256):
        super(PyramidFeatures, self).__init__()

        # P5: 1x1 lateral conv on C5, 2x upsampling for the top-down path, 3x3 output conv
        self.P5_1 = nn.Conv2d(C5_size, feature_size, kernel_size=1, stride=1, padding=0)
        self.P5_upsampled = nn.Upsample(scale_factor=2, mode='nearest')
        self.P5_2 = nn.Conv2d(feature_size, feature_size, kernel_size=3, stride=1, padding=1)

        # P4: 1x1 lateral conv on C4, 2x upsampling, 3x3 output conv
        self.P4_1 = nn.Conv2d(C4_size, feature_size, kernel_size=1, stride=1, padding=0)
        self.P4_upsampled = nn.Upsample(scale_factor=2, mode='nearest')
        self.P4_2 = nn.Conv2d(feature_size, feature_size, kernel_size=3, stride=1, padding=1)

        # P3: 1x1 lateral conv on C3, 3x3 output conv (finest level, no upsampling)
        self.P3_1 = nn.Conv2d(C3_size, feature_size, kernel_size=1, stride=1, padding=0)
        self.P3_2 = nn.Conv2d(feature_size, feature_size, kernel_size=3, stride=1, padding=1)

        # P6: 3x3 stride-2 conv applied directly to C5
        self.P6 = nn.Conv2d(C5_size, feature_size, kernel_size=3, stride=2, padding=1)

        # P7: ReLU followed by a 3x3 stride-2 conv on P6
        self.P7_1 = nn.ReLU()
        self.P7_2 = nn.Conv2d(feature_size, feature_size, kernel_size=3, stride=2, padding=1)

    def forward(self, inputs):
        C3, C4, C5 = inputs

        P5_x = self.P5_1(C5)
        P5_upsampled_x = self.P5_upsampled(P5_x)
        P5_x = self.P5_2(P5_x)

        # Merge the upsampled P5 with the lateral C4 features
        P4_x = self.P4_1(C4)
        P4_x = P5_upsampled_x + P4_x
        P4_upsampled_x = self.P4_upsampled(P4_x)
        P4_x = self.P4_2(P4_x)

        # Merge the upsampled P4 with the lateral C3 features
        P3_x = self.P3_1(C3)
        P3_x = P3_x + P4_upsampled_x
        P3_x = self.P3_2(P3_x)

        P6_x = self.P6(C5)

        P7_x = self.P7_1(P6_x)
        P7_x = self.P7_2(P7_x)

        return [P3_x, P4_x, P5_x, P6_x, P7_x]
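The element-wise additions in `forward` only work when each upsampled map has exactly the same spatial size as the lateral map it is added to. As a rough sanity check, the sketch below reproduces the spatial-size arithmetic of the layers in `PyramidFeatures` in plain Python. It assumes square feature maps and dilation 1, and the helper names `conv_out` and `pyramid_sizes` are hypothetical, not part of the `retinanet` package.

```python
def conv_out(size, kernel, stride, padding):
    """Output size of a Conv2d along one spatial dimension (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def pyramid_sizes(c3, c4, c5):
    """Return the spatial sizes of [P3, P4, P5, P6, P7].

    c3, c4, c5 are the (square) spatial sizes of the C3, C4, C5 inputs.
    Raises ValueError where the additions in forward() would not line up.
    """
    # 1x1 lateral convs (stride 1, padding 0) keep the spatial size.
    p5 = conv_out(c5, kernel=1, stride=1, padding=0)
    p4 = conv_out(c4, kernel=1, stride=1, padding=0)
    p3 = conv_out(c3, kernel=1, stride=1, padding=0)

    # nn.Upsample(scale_factor=2) doubles the size; the sum needs equal sizes.
    if 2 * p5 != p4:
        raise ValueError(f"upsampled P5 ({2 * p5}) does not match C4 ({p4})")
    if 2 * p4 != p3:
        raise ValueError(f"upsampled P4 ({2 * p4}) does not match C3 ({p3})")

    # The 3x3 stride-1 padding-1 output convs also keep the size,
    # so P3, P4, P5 end up with the sizes computed above.
    # P6 and P7 each apply a 3x3 stride-2 padding-1 conv.
    p6 = conv_out(c5, kernel=3, stride=2, padding=1)
    p7 = conv_out(p6, kernel=3, stride=2, padding=1)
    return [p3, p4, p5, p6, p7]


if __name__ == "__main__":
    print(pyramid_sizes(32, 16, 8))
```

With feature maps of size 32, 16 and 8 the sizes halve cleanly at every level. Sizes such as 75, 38 and 19 fail at the P4 to P3 merge, because 2 × 38 is 76 rather than 75.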